Using GitHub Actions, not for deployments...

K

21 Oct 2021

Recently, I was upgrading a project that helps you find organisations in the UK that are registered as licensed sponsors. The list is available on the gov website and, at the time of writing this article, it had over 48,296 records! The list is updated roughly daily as more organisations apply for a new license or are removed because their license has expired.

Furthermore, many records are duplicates or not relevant to your skills, as the route for each organisation can be one of several types:

- Skilled Worker

- Creative Worker

- Intra-company Routes

- Charity Worker

- and many more...

Work Sponsors parses this list and filters for the "Skilled Worker" category. You can search for a company by name, location or any other keyword, and it lists all the matching entries in an adaptive UI built on the Angular Material theme. The updated MVP only parses the CSV file from the gov website, but the idea behind this website is to gather all the details about each company and its job openings so you can make the right choice.
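To make the filtering concrete, here is a minimal Python sketch of the idea (the site itself is an Angular app, so this is not its actual code). The column names `Organisation Name`, `Town/City` and `Route` are assumptions about the CSV header, and the function names are hypothetical.

```python
import csv
import io

# Assumed column names; check them against the real CSV header.
NAME_COL = "Organisation Name"
TOWN_COL = "Town/City"
ROUTE_COL = "Route"


def skilled_worker_sponsors(csv_text, route="Skilled Worker"):
    """Return one row per organisation that is listed under the given route."""
    seen = set()
    matches = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        if row[ROUTE_COL].strip() != route:
            continue
        # The same organisation can appear more than once; keep the first row.
        key = (row[NAME_COL].strip().lower(), row[TOWN_COL].strip().lower())
        if key not in seen:
            seen.add(key)
            matches.append(row)
    return matches


def search(rows, keyword):
    """Case-insensitive keyword match against the name or the town."""
    needle = keyword.strip().lower()
    return [
        row for row in rows
        if needle in row[NAME_COL].lower() or needle in row[TOWN_COL].lower()
    ]
```

Calling `search(skilled_worker_sponsors(text), "london")` would then return only the de-duplicated "Skilled Worker" rows whose name or town mentions London.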

Distributed Architecture

When we started this project, we built a microservice-based solution in which an independent API was responsible for getting the updated list from the gov website. It was deployed on GCP and had everything a modern infrastructure has. Over time, the cost of cloud deployment became a big blocker.

While upgrading the project and planning to focus only on the MVP, I automated the deployment to GitHub Pages (free for public repos) using GitHub Actions (1000 runs for private repositories). GitHub Pages only allows static hosting, which means no backend service to check for the updated list, and I hate manual, menial tasks like updating the CSV... Daily...

There is more to...

GitHub Actions than you think, and they are free to run on any public repository. I knew I was going to use GitHub Actions to automate fetching and updating the sponsor list.

And an eternity later, I managed to build a GitHub Action that looks for the updated CSV link (the file link changes with the date and time), then downloads the file to the repository and commits the changes. The UI always has an up-to-date list of organisations. Oh, did I tell you it is scheduled to run every 10 minutes :)

Tech Tech

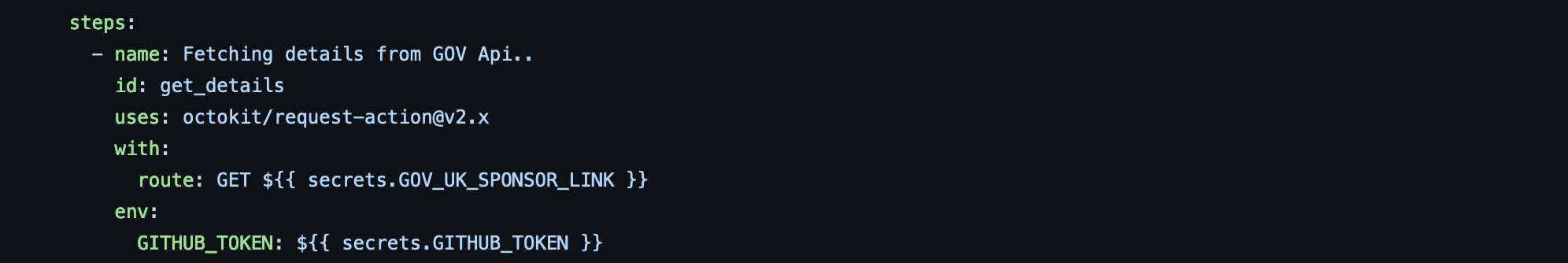

First, get the details from the gov website, because the CSV link is not static and changes with the date and time. I used the octokit request action for the GET call.
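The workflow does this lookup with an action, but the underlying idea is simply "find the current CSV link in the page". A rough Python sketch of that idea, assuming the page exposes the file as an ordinary `<a href="….csv">` link (the class and function names here are hypothetical):

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class CsvLinkFinder(HTMLParser):
    """Collects the href of every <a> tag that points at a .csv file."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href") or ""
        # Ignore any query string when checking the file extension.
        if href.split("?", 1)[0].lower().endswith(".csv"):
            self.links.append(href)


def find_csv_url(page_html, page_url):
    """Return the absolute URL of the first CSV link on the page, or None."""
    finder = CsvLinkFinder()
    finder.feed(page_html)
    if not finder.links:
        return None
    # Relative links are resolved against the page they were found on.
    return urljoin(page_url, finder.links[0])
```

Because the link is looked up on every run, it does not matter that the file name carries a new date and time each day.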

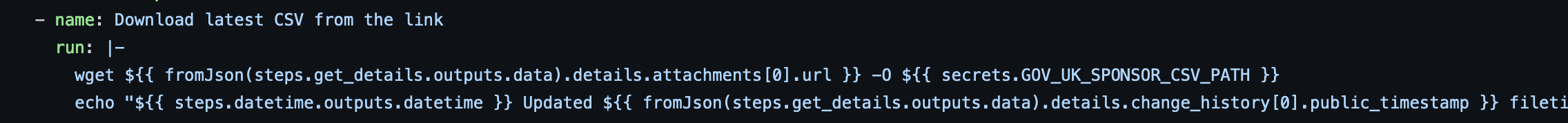

Now to download the file, use the link from the previous step and save it to the nominated location.
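As an illustration of this step outside the workflow, here is a small Python sketch that downloads the file and reports whether it differs from the copy already in the repository. The function name and the destination path are hypothetical; only the standard library is used.

```python
from pathlib import Path
from urllib.request import urlopen


def download_if_changed(url, destination):
    """Save the file at `url` to `destination`; return True if it changed."""
    destination = Path(destination)
    with urlopen(url, timeout=30) as response:
        new_bytes = response.read()
    if destination.exists() and destination.read_bytes() == new_bytes:
        return False  # same content as before, so nothing to commit
    destination.parent.mkdir(parents=True, exist_ok=True)
    destination.write_bytes(new_bytes)
    return True
```

Returning a flag keeps a frequently scheduled job quiet: most runs will find the same file and can stop before the commit step.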

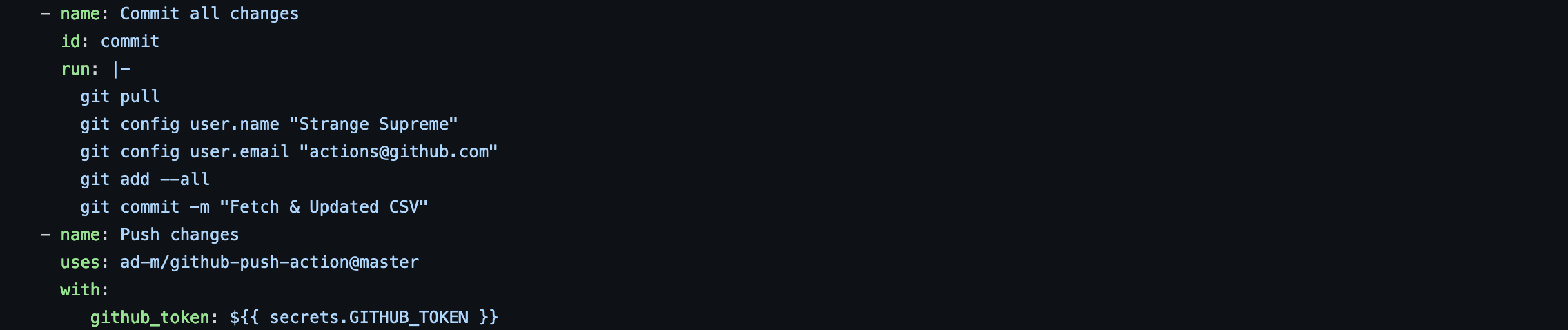

Commit & push
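In the workflow this is a few git commands; the same logic can be sketched in Python as below. This is an assumption-laden illustration rather than the workflow's actual step: the function name and commit message are made up, and it presumes git is installed and that `user.name` and `user.email` are already configured on the runner.

```python
import subprocess
from datetime import datetime, timezone


def commit_and_push(path, run=subprocess.run):
    """Stage `path`, then commit and push only if it actually changed."""
    run(["git", "add", path], check=True)
    # `git diff --cached --quiet` exits with 1 when staged changes exist.
    staged = run(["git", "diff", "--cached", "--quiet"])
    if staged.returncode == 0:
        return False  # nothing staged, skip the commit
    # Put a UTC timestamp in the message so each update is easy to spot.
    stamp = datetime.now(timezone.utc).strftime("%Y-%m-%d %H:%M UTC")
    run(["git", "commit", "-m", f"Update sponsor list ({stamp})"], check=True)
    run(["git", "push"], check=True)
    return True
```

The `run` parameter exists only so the function can be exercised without touching a real repository.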

And this is done in 3 simple steps. There are a few more details like DateTime and setting the timestamp.

Here is the full version.

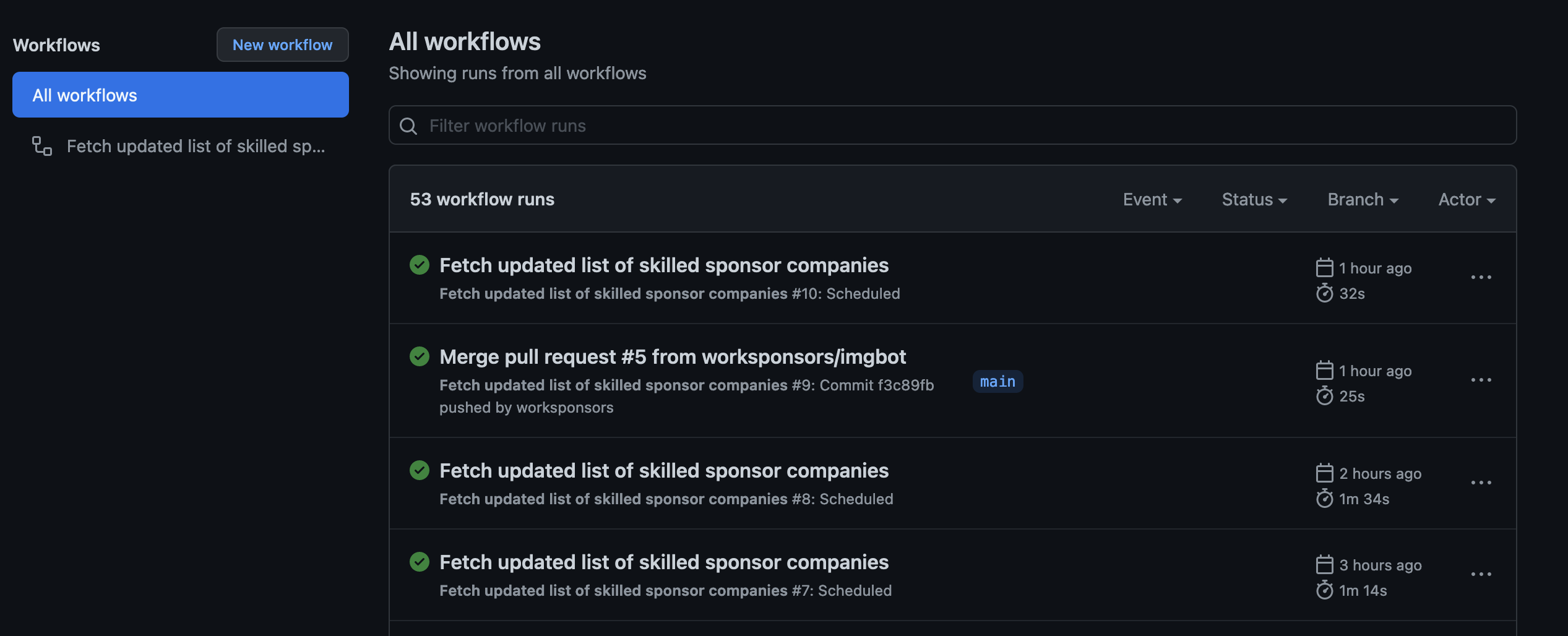

To end this article, here is the screengrab of the beautiful scheduled job -

So next time you think about GitHub Actions... think beyond deployments

K

A skilled, focused and motivated professional with 11+ years of experience as a full-stack .NET cloud developer, consultant and team lead across a range of Agile-centric delivery teams. Profoundly experienced in delivering both frontend and backend solutions using the most advanced tech stack. Highly adaptive to diverse working environments and a fast learner of varied technologies.
