What Is Tokenization? | How It Works

Fawzan Hussain

27 Oct 2021


Introduction

With each passing second, we move closer to complete digitalization. From cash to inventories to banking, more and more is being done digitally, and since the pandemic, almost every business has found a way to make its services available online. Despite the daily need for online transactions, fraud is undeniably increasing as well. In an online transaction, your card number, PIN and other details pass through multiple authentication points, and each one adds risk. Even with one-time passwords, verifications and cybersecurity awareness, a single click can cost you your data and money, and fraudsters keep getting smarter.

Most people pay with credit or debit cards. Do you feel safe when you pay? Do you feel safe giving your information to these merchants?

Tokenization was introduced to reduce these risks by providing a safer way to exchange payment information.

History of Tokenization

Tokens have existed physically for ages. Their origins can be traced to early currency systems, in which coin tokens were used. A token is an object or voucher that acts as a medium of exchange for, or a representation of, goods and services. Tokens can also be given out in promotional offers or as gifts.

Tokens are still used today in places like casinos, subways and metros, where they replace money: casino chips, metro tokens and subway tokens are all examples. In each case, the token, rather than the currency itself, represents the holder's right to a certain value.

The idea of digital tokens, in which non-sensitive data stands in for sensitive data, can be traced back as early as the 1970s.

In 2001, however, TrustCommerce introduced digital tokenization to protect cardholders' sensitive data and make card payments safer. Before this, merchants kept card data on their own servers, so anyone who hacked those servers or gained access to the system could easily obtain it. With tokenization, merchants no longer needed to store this sensitive information, so a breach on the merchant's side no longer exposed it.

What is Tokenization

Tokenization is a data protection technology that can help meet security and compliance requirements without sacrificing the business utility of sensitive data. How does it work?

Tokenization exchanges sensitive values, or data, for non-sensitive values called tokens. Tokens cannot be mathematically reversed to reveal the original data; only the tokenization system that issued them can map them back. Therefore, even if tokens are stolen in a breach, the original data stays safe.

Tokenization minimizes the amount of sensitive data an organization needs to keep. It also provides strong security at relatively low cost and complexity, which is why digital tokenization has become popular for credit cards and e-commerce.

What is a token

A token is a piece of data that stands in for another, more valuable and sensitive piece of data. The token itself holds little value; what matters is the role it plays.

For example, when you go to a casino, you get casino chips, which are tokens. Each chip represents a certain amount of money that you can bet at the table. Chips are used instead of money because bundles of cash kept on a table are easy to lose.

How does data tokenization work?

At its most basic level, tokenization works by replacing a sensitive value with a surrogate value. Because the surrogate is useless on its own, a hacker or thief who steals it cannot do anything with it. Creating a surrogate is easy: it is simply a randomly generated string of numbers. Since there is no logic or mathematical relationship between the token and the original value, an intruder cannot decrypt or reverse it. The only way back to the original value is to look the token up in a secure database that maps each token to the data it replaced.
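The lookup described above can be sketched as a small in-memory vault. This is a minimal illustration under stated assumptions, not a production design: the class name `TokenVault` is hypothetical, the mappings live in plain dictionaries, and a real vault would encrypt the stored values and strictly control who may call `detokenize`.

```python
import secrets

class TokenVault:
    """Hypothetical in-memory token vault: maps random tokens back to original values."""

    def __init__(self):
        self._token_to_value = {}
        self._value_to_token = {}

    def tokenize(self, value: str) -> str:
        # Reuse the existing token so the same value always maps to one token.
        if value in self._value_to_token:
            return self._value_to_token[value]
        # The token is pure randomness: nothing about it is derived from `value`.
        token = secrets.token_hex(8)
        while token in self._token_to_value:  # guard against a (very unlikely) collision
            token = secrets.token_hex(8)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        # Only the vault can map a token back; unknown tokens raise KeyError.
        return self._token_to_value[token]

vault = TokenVault()
token = vault.tokenize("4111 1111 1111 1111")
print(token)                    # 16 random hex characters, different on every run
print(vault.detokenize(token))  # 4111 1111 1111 1111
```

Because the token is random, a stolen token reveals nothing; the security of the scheme rests entirely on protecting the vault itself.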

Whenever you swipe your credit card at a terminal, you give the merchant sensitive information such as your primary account number (PAN), your full name and the card's expiration date. With tokenization, you only need to give the merchant the surrogate value instead.
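A surrogate for a card number is often generated so that it keeps the same length and format as the real PAN, allowing existing systems to handle it unchanged. The sketch below assumes, for illustration only, that the last four digits are kept (so a receipt can still say "ending in 1111") and the rest are random; the function name is hypothetical, and the result would still need to be recorded in a token vault to be mapped back.

```python
import secrets

def make_surrogate_pan(pan: str, keep_last: int = 4) -> str:
    """Return a random, same-length surrogate for a card number (hypothetical helper)."""
    digits = pan.replace(" ", "")
    if not digits.isdigit() or len(digits) <= keep_last:
        raise ValueError("expected a card number made of digits")
    # Every digit except the last `keep_last` is drawn at random.
    random_digits = "".join(
        secrets.choice("0123456789") for _ in range(len(digits) - keep_last)
    )
    return random_digits + digits[-keep_last:]

print(make_surrogate_pan("4111 1111 1111 1111"))  # 16 digits ending in 1111; the first 12 are random
```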

What is the purpose of Tokenization?

The main goal of tokenization is to avoid storing sensitive information at all, because a token takes its place. Information passed around as tokens is useless to a hacker even if it is stolen. Tokenization is not a complete security system by itself, but because a token cannot be traced back to its original value, it helps keep data breaches from exposing real data.

Its purpose is to minimize or eliminate the risk of customer data theft, reduce the legal consequences of such theft, and avoid expensive remediation and compensation for the damage.

PCI tokenization

The payment card industry (PCI) follows standardized rules that restrict how retailers may store card data: sensitive authentication data, such as the card verification code, must not be kept after a transaction is authorized, and any card number that is stored must be rendered unreadable. As a result, a merchant must either invest in expensive end-to-end encryption, use a payment gateway service, or outsource transaction processing to a PCI-compliant service provider. In the last case, it is the service provider's responsibility to keep cardholder data safe and secure.

In 2004, Visa, American Express and MasterCard came together to create a set of rules that all retailers must follow when accepting their cards; these are now known as the PCI DSS. The Payment Card Industry Data Security Standard helps protect the PAN of your credit card. Under PCI DSS, merchants must protect cardholder data during a transaction, and merchants that fail to do so can be fined or lose the ability to accept card payments. With tokenization, merchants store tokens instead of PANs, which makes it easier to comply with PCI DSS.

Types of Tokenization used in NLP

The word tokenization also has a different meaning in natural language processing (NLP), the field of computing that processes human language for tasks such as grammar error detection, language translation and fake news detection. In NLP, tokenization breaks text into small chunks called tokens. It is usually the first step in interpreting text, because later stages analyze the sequence of these tokens. Any raw text can be tokenized; for example, "Bird is flying" can be tokenized into three tokens: bird, is, flying. Tokenization can be done word by word or sentence by sentence. If the tokens are words, it is called word tokenization; if the tokens are sentences, it is called sentence tokenization.
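Word and sentence tokenization can be sketched with Python's standard `re` module. The rules here are deliberately naive assumptions for illustration: a sentence is assumed to end at `.`, `!` or `?` followed by whitespace (so abbreviations such as "Dr." would be split wrongly), and each punctuation mark becomes its own word token.

```python
import re

def sentence_tokenize(text: str) -> list:
    # Naive rule: a sentence ends at ".", "!" or "?" followed by whitespace.
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]

def word_tokenize(text: str) -> list:
    # A word is a run of letters, digits or underscores; each punctuation mark is its own token.
    return re.findall(r"\w+|[^\w\s]", text)

print(sentence_tokenize("Bird is flying. It is fast!"))  # ['Bird is flying.', 'It is fast!']
print(word_tokenize("Bird is flying"))                    # ['Bird', 'is', 'flying']
```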

Here are some tokenization techniques used in NLP:

White space tokenization:

This is the simplest and fastest form of tokenization. As the name suggests, whitespace tokenization splits the text into a new token wherever it encounters whitespace between words. It is therefore only useful for languages such as English, where whitespace separates one word from the next.
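In Python, whitespace tokenization is a single call to the built-in `str.split()`. The second example, the Chinese sentence 我爱北京 ("I love Beijing"), shows the limitation mentioned above: with no spaces, the whole sentence comes back as one token. Note also that punctuation stays attached to the neighboring word.

```python
def whitespace_tokenize(text: str) -> list:
    # str.split() with no argument splits on any run of whitespace and drops empty strings.
    return text.split()

print(whitespace_tokenize("Bird is  flying."))  # ['Bird', 'is', 'flying.']
print(whitespace_tokenize("我爱北京"))            # ['我爱北京']: no spaces, so no split
```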

Dictionary-based Tokenization:

In this method, text is split into tokens that match words already in a dictionary. If a word is not found in the dictionary, a special fallback method is used to tokenize it.
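One common dictionary-based strategy is greedy longest match ("maximum matching"), often used for languages written without spaces. In the sketch below, the tiny dictionary is hypothetical, and emitting a single character for text that matches no dictionary word is just one possible fallback, assumed here for simplicity.

```python
def max_match(text: str, dictionary: set, max_len: int = 10) -> list:
    """Greedy longest-match tokenization against a dictionary."""
    tokens, i = [], 0
    while i < len(text):
        # Try the longest candidate first, shrinking until a dictionary word matches.
        for j in range(min(len(text), i + max_len), i, -1):
            if text[i:j] in dictionary:
                tokens.append(text[i:j])
                i = j
                break
        else:
            # No dictionary word starts here: fall back to a single-character token.
            tokens.append(text[i])
            i += 1
    return tokens

words = {"the", "bird", "is", "fly", "flying"}
print(max_match("thebirdisflying", words))  # ['the', 'bird', 'is', 'flying']
```

Because the longest match wins, "flying" is chosen over the shorter dictionary entry "fly".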

Rule-based Tokenization:

In this approach, a set of rules (for example, for punctuation, numbers and contractions) is written and applied to split the text into tokens.
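A minimal rule-based tokenizer can be built from an ordered list of regular expressions, where the first rule that matches at the current position wins. The three rules below (numbers, words with contractions, any other single non-space character) are illustrative assumptions, not a standard rule set.

```python
import re

# Rules are tried in order at each position; the first one that matches wins.
RULES = [
    r"\d+(?:\.\d+)?",            # numbers, including decimals such as 4.50
    r"[A-Za-z]+(?:'[a-z]+)?",    # words, keeping contractions such as isn't together
    r"\S",                       # fallback: any other single non-space character
]
TOKEN_RE = re.compile("|".join(f"(?:{rule})" for rule in RULES))

def rule_tokenize(text: str) -> list:
    return TOKEN_RE.findall(text)

print(rule_tokenize("It costs $4.50, isn't it?"))
# ['It', 'costs', '$', '4.50', ',', "isn't", 'it', '?']
```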

Penn Treebank tokenization:

This method splits text into tokens based on punctuation, clitics (contracted forms such as n't and 'll) and hyphens, following the conventions of the Penn Treebank corpus.
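The clitic and punctuation handling can be approximated with a few regular expressions. This is a simplified sketch assumed for illustration, not the full Penn Treebank rule set (it does not, for example, convert quotation marks); it only shows the characteristic splitting of clitics such as "n't" and "'ll".

```python
import re

def treebank_style_tokenize(text: str) -> list:
    # Split off the negation clitic: "isn't" -> "is n't", "can't" -> "ca n't".
    text = re.sub(r"(\w)(n't)\b", r"\1 \2", text, flags=re.IGNORECASE)
    # Split off other clitics: "They'll" -> "They 'll", "it's" -> "it 's".
    text = re.sub(r"(\w)('ll|'re|'ve|'s|'m|'d)\b", r"\1 \2", text, flags=re.IGNORECASE)
    # Put spaces around punctuation so each mark becomes its own token.
    text = re.sub(r"([.,!?;:()])", r" \1 ", text)
    return text.split()

print(treebank_style_tokenize("They'll say it isn't ready."))
# ['They', "'ll", 'say', 'it', 'is', "n't", 'ready', '.']
print(treebank_style_tokenize("I can't go."))
# ['I', 'ca', "n't", 'go', '.']
```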

spaCy tokenizer:

spaCy's tokenizer is a modern, easily customizable tokenizer; you can add rules so that special characters or specific tokens are segmented exactly as you need.

Moses tokenizer:

The Moses tokenizer, which comes from the Moses machine translation toolkit, is a collection of fairly complex normalization and segmentation rules for English text.

Subword tokenization:

In this form of tokenization, a word that occurs frequently is kept as a single token with a unique ID, while less frequent forms of that word are broken into subword tokens. For example, if "great" appears frequently, it is given its own ID, and its rarer forms, "greater" and "greatest", are split into "great" plus the subword tokens "er" and "est".
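Byte-pair encoding (BPE) is one widely used way to learn such subword units from word frequencies; the article does not name a specific algorithm, so BPE is swapped in here as an illustration. BPE starts from single characters and repeatedly merges the most frequent adjacent pair. The toy corpus and the choice of 7 merges are hypothetical, picked so that the frequent word "great" becomes one token while "er" and "est", shared by several words, become suffix tokens.

```python
from collections import Counter

def merge_pair(symbols, pair):
    """Replace each adjacent occurrence of `pair` in `symbols` with the two symbols joined."""
    out, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return out

def learn_bpe(word_counts, num_merges):
    """Learn BPE merge rules from a {word: frequency} mapping."""
    segmented = {word: list(word) for word in word_counts}  # start from characters
    merges = []
    for _ in range(num_merges):
        pair_counts = Counter()
        for word, symbols in segmented.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += word_counts[word]  # weight by word frequency
        if not pair_counts:
            break
        best = max(pair_counts, key=pair_counts.get)  # ties: the pair seen first wins
        merges.append(best)
        segmented = {w: merge_pair(s, best) for w, s in segmented.items()}
    return merges

def bpe_tokenize(word, merges):
    """Split a word into subwords by replaying the learned merges in order."""
    symbols = list(word)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return symbols

# Hypothetical toy corpus: "great" is frequent; -er and -est recur across several words.
CORPUS = {
    "great": 10, "greater": 1, "greatest": 1,
    "kind": 1, "kinder": 1, "kindest": 1,
    "warm": 1, "warmer": 1, "warmest": 1,
    "cold": 1, "colder": 1, "coldest": 1,
}
merges = learn_bpe(CORPUS, num_merges=7)
for w in ["great", "greater", "greatest"]:
    print(w, bpe_tokenize(w, merges))
# great ['great']
# greater ['great', 'er']
# greatest ['great', 'est']
```

Unseen words reuse the learned pieces too: with these merges, "newest" becomes `['n', 'e', 'w', 'est']`.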

Difference between Tokenization and encryption

When it comes to securing sensitive information, tokenization and encryption are often thought of as two peas in a pod. Both can help you secure information and protect your organization, but they are not the same. Each has its own strengths and weaknesses, so which one is preferable depends on the situation.

Encryption uses an algorithm and an encryption key to make plain text unreadable; the unreadable result is called ciphertext. To recover the original plain text, you need both the algorithm and the decryption key. Today, most leading companies use either built-in or third-party encryption tools to secure their data against breaches. There are two types of encryption: symmetric-key and asymmetric-key. In symmetric-key encryption, the same key is used both to encrypt and to decrypt, so if that key is compromised, the original data can be accessed. Asymmetric-key encryption, also called public-key encryption, uses a pair of keys: the public key used for encryption can be shared openly, because data encrypted with it can be decrypted only with the matching private key, which is kept secret.
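The symmetric-key property (one key both encrypts and decrypts, so whoever holds it can read the data) can be shown with a one-time pad. A one-time pad is used here only because it needs nothing beyond Python's standard library; it is impractical for real systems, since the key must be as long as the data and never reused, and real systems instead use a vetted algorithm such as AES through a cryptography library. The function names are hypothetical.

```python
import secrets

def xor_bytes(data: bytes, key: bytes) -> bytes:
    # XOR each byte of the data with the matching byte of the key.
    return bytes(d ^ k for d, k in zip(data, key))

def otp_encrypt(plaintext: bytes) -> tuple:
    """One-time pad: a fresh random key as long as the message, used only once."""
    key = secrets.token_bytes(len(plaintext))
    return xor_bytes(plaintext, key), key

def otp_decrypt(ciphertext: bytes, key: bytes) -> bytes:
    # Symmetric: the same key and the same XOR operation undo the encryption.
    return xor_bytes(ciphertext, key)

ciphertext, key = otp_encrypt(b"4111 1111 1111 1111")
print(ciphertext.hex())               # random-looking bytes, unreadable without the key
print(otp_decrypt(ciphertext, key))   # b'4111 1111 1111 1111'
```

Unlike a token, the ciphertext is mathematically derived from the data, so anyone who obtains the key can recover the original.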

Whichever type of encryption is used, rotating keys regularly is recommended. If a key is compromised, only the data encrypted with that key is exposed while the rest stays safe, so using multiple keys for encryption is encouraged.

Unlike encryption, tokenization has no key or mathematical relationship that leads from a token back to the original sensitive data. Instead, it uses a token vault: the vault stores the original sensitive data, and a token replaces that value everywhere else. The real data inside the vault is usually secured with encryption.

Tokenization

- the sensitive data is replaced by tokens

- tokens are random numbers that have no meaningful value even if they are exposed in a security breach

- the original data never leaves the token vault

- the data format can be preserved without weakening security

- you cannot share the original data without access to the token vault

Encryption

- the sensitive data is secured in encrypted form with the help of an algorithm and a key

- the original data is shared, but in encrypted form

- format-preserving encryption offers lower security

- data can be shared with a third party who has the encryption key

Compared with encryption, tokenization is often considered more secure for card-not-present payments, such as online and in-app purchases, where no physical card is involved. Encryption, on the other hand, remains a strong choice for protecting card information when the card is physically present.

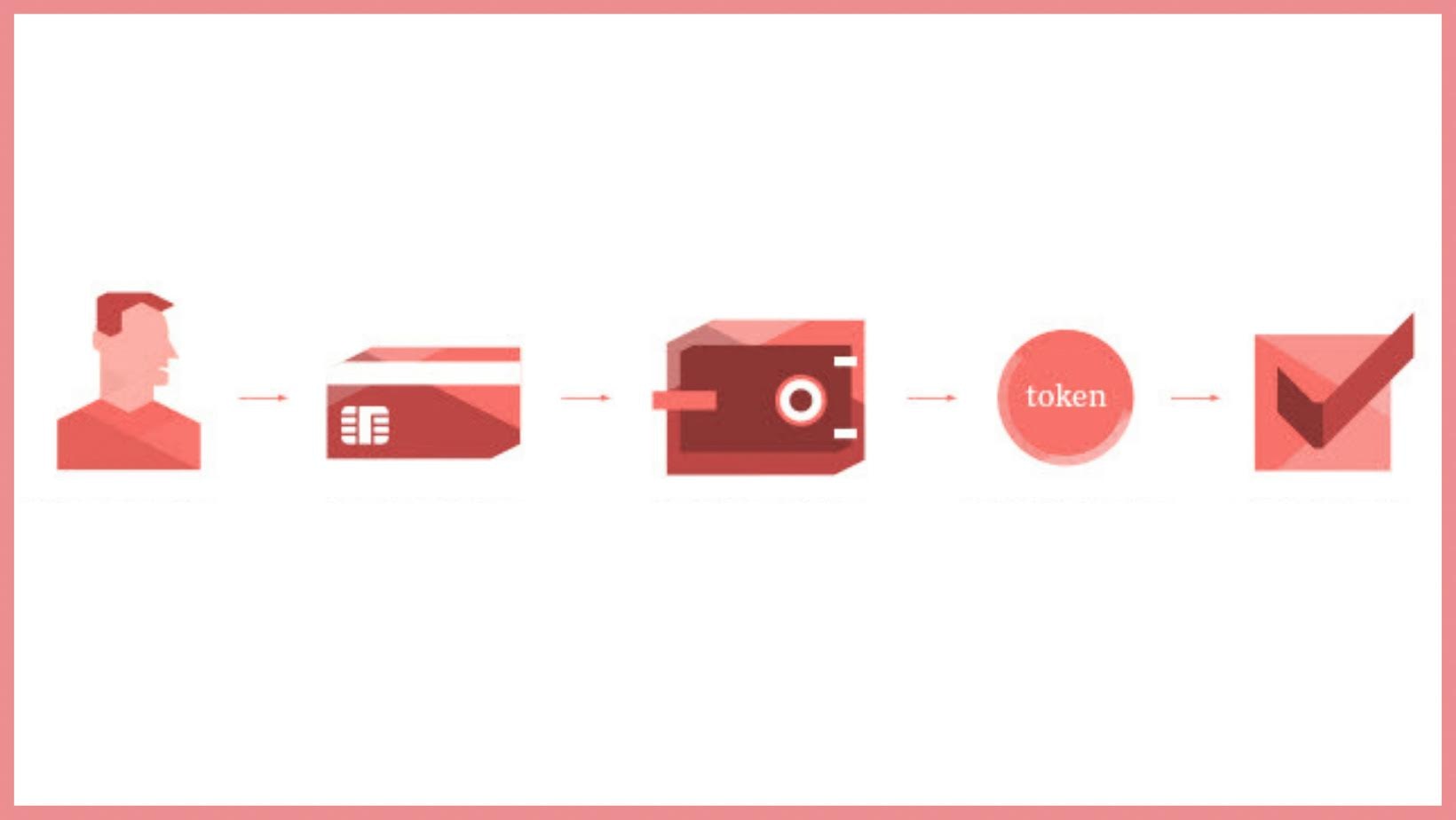

What is payment tokenization?

Suppose you buy a laptop from an online store such as Amazon. You share your card details with the website, and a one-time password (OTP) may be sent to authenticate the payment. To make your next purchase smoother, the website saves your card details. If the website is later hacked, your card details are exposed as well.

With tokenization, the card details are exchanged for a token, and the token, rather than the card number, is used when making a purchase. Mobile wallets such as Google Pay and Apple Pay work this way: when a merchant supports them, you pay with a token, and these companies have made tokenization accessible to everyone.

Say you choose to pay for the laptop with Google Pay. First, you share your PAN with Google. Google then contacts your bank and asks it for a token that you can use with merchants. The bank provides a token to your phone, and your phone passes it to the merchant: over NFC (near-field communication) when paying in a store, or through the app or browser when paying online. The merchant sends the token to its acquirer (the merchant's bank), and the acquirer checks it with your bank. If the token matches the original data stored in the bank's vault, the bank approves the payment, the acquirer passes the approval on to the merchant, and you receive a notification that the payment has been made. The whole transaction happens without the merchant ever knowing your PAN, and that is the power of tokenization.
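The flow above can be sketched as three cooperating objects. Everything here is hypothetical and heavily simplified: the class names are invented, the wallet provider and the card network are folded into direct method calls, and a real issuer checks far more than whether it recognizes the token. What the sketch shows is that the merchant only ever handles the token.

```python
import secrets

class IssuingBank:
    """Hypothetical card-issuing bank that also runs the token vault."""

    def __init__(self):
        self._vault = {}  # token -> PAN; only the bank can see this mapping

    def issue_token(self, pan: str) -> str:
        # Step 1: the wallet (e.g. Google Pay) asks the bank for a token for this card.
        token = "".join(secrets.choice("0123456789") for _ in range(16))
        self._vault[token] = pan
        return token

    def authorize(self, token: str, amount_cents: int) -> bool:
        # Step 4: look the token up in the vault. A real issuer would also check
        # balance, limits and fraud rules; here any known token is approved.
        return token in self._vault

class Acquirer:
    """Merchant's bank: forwards authorization requests to the issuer."""

    def __init__(self, issuer: IssuingBank):
        self.issuer = issuer

    def authorize(self, token: str, amount_cents: int) -> bool:
        # Step 3: in reality this request travels over the card network.
        return self.issuer.authorize(token, amount_cents)

class Merchant:
    def __init__(self, acquirer: Acquirer):
        self.acquirer = acquirer
        self.stored_payments = []  # what the merchant keeps: tokens, never PANs

    def checkout(self, token: str, amount_cents: int) -> bool:
        # Step 2: the phone hands the token (not the PAN) to the merchant.
        approved = self.acquirer.authorize(token, amount_cents)
        self.stored_payments.append((token, amount_cents, approved))
        return approved

pan = "4111111111111111"
bank = IssuingBank()
merchant = Merchant(Acquirer(bank))
token = bank.issue_token(pan)
print(merchant.checkout(token, 89_999))       # True
print(pan in str(merchant.stored_payments))   # False: the merchant never saw the PAN
```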

Benefits of Tokenization

- Tokenization makes credit and debit card payments more secure by reducing the risk of sensitive information being lost to hackers.

- Tokenization can make PCI compliance easier than encryption alone, because systems that hold only tokens may fall outside the scope of PCI DSS, which means fewer legal issues for merchants.

- Tokenization is often less expensive than encryption, so fewer resources are spent on securing sensitive information.

- Tokenization reduces the impact of a data breach. For example, if a business stores its financial data as tokens and suffers a security breach, the organization responsible for the data will face fewer consequences.

- Tokenization reduces data exposure, because the sensitive data is kept in tokenization servers or token vaults that are heavily protected and encrypted.

- Tokenization reduces the number of steps a merchant must take to ensure that their organization complies with PCI DSS.

- Tokenization has made payments more secure and accessible. For example, third-party wallets, digital payments, one-click payments and cryptocurrencies have become more accessible thanks to tokenization. This builds trust between customers and merchants and encourages customers to shop without worrying about security breaches.

Drawbacks of Tokenization

Because tokenization involves many steps to ensure security, it adds complexity to your IT infrastructure. Transactions can also take longer, since the customer's sensitive data must go through multiple checks, including detokenization and retokenization, before the payment is authorized. You can also become locked in to a particular payment processor, because tokens issued by one provider generally cannot be used with another.

In addition, not every merchant supports tokenization. Although it has become more accessible, it is still not available everywhere.

Despite the security it offers, tokenization does not eliminate risk entirely. When you rely on a third-party vault, your data is only as safe as that vendor, so it is vital to choose a secure one.

Token and annotation

As described in the NLP section, a token in text processing holds a piece of raw text. An annotator token gives the user an embedded link in which they can add annotations. Annotations are remarks: a way of making a note or comment on something. An annotator token helps keep track of the annotations made by different users.

Tokenization and blockchain

As you can see, tokenization is a very useful and secure tool for PCI compliance, and combining it with blockchain makes even more possible. In a blockchain, tokenization produces a blockchain token, also called an asset token or security token. When a token is issued on a blockchain, the blockchain records the issuance, and every later movement of the token is recorded in a ledger. Blockchain tokens represent real assets and have no physical form; cryptocurrencies, which are used as digital money, are one example.

Traditionally, banks are responsible for keeping a ledger of every transaction you make. In a blockchain, or token economy, that responsibility and power shift to the individuals involved, decentralizing control.

Blockchain's most distinctive feature is that it prevents duplication. Anything digital, whether an image, a video or an email, can normally be copied. Blockchain solves this double-spending problem because every participant holds their own copy of the ledger with the full trail of transactions. For example, every bitcoin is a token; whenever a bitcoin is spent, every member's copy of the ledger is updated to record it, so no bitcoin can be spent twice.
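The double-spending check can be sketched with a toy, single-copy ledger in which each entry stores the SHA-256 hash of the previous one, so tampering with recorded history is detectable. This is a hypothetical illustration only: a real blockchain replicates the ledger across many independent nodes and uses a consensus protocol (Bitcoin uses proof of work) to agree on its contents, and the one-owner-per-token model here is a simplification of how Bitcoin actually tracks funds.

```python
import hashlib
import json

class Ledger:
    """Toy append-only ledger: each entry stores the hash of the previous entry."""

    def __init__(self):
        self.entries = []
        self.owner = {}  # token id -> current owner

    @staticmethod
    def _hash(body: dict) -> str:
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def _append(self, record: dict) -> None:
        prev = self.entries[-1]["hash"] if self.entries else "0" * 64
        body = dict(record, prev=prev)
        self.entries.append(dict(body, hash=self._hash(body)))

    def issue(self, token_id: str, owner: str) -> None:
        if token_id in self.owner:
            raise ValueError(f"{token_id} already exists")
        self._append({"type": "issue", "token": token_id, "to": owner})
        self.owner[token_id] = owner

    def transfer(self, token_id: str, sender: str, receiver: str) -> None:
        # Reject the transfer unless the sender currently owns the token:
        # this is what stops the same token from being spent twice.
        if self.owner.get(token_id) != sender:
            raise ValueError(f"{sender} does not own {token_id}")
        self._append({"type": "transfer", "token": token_id, "from": sender, "to": receiver})
        self.owner[token_id] = receiver

    def verify(self) -> bool:
        # Recompute every hash; any edited entry breaks the chain.
        prev = "0" * 64
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev"] != prev or self._hash(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True

ledger = Ledger()
ledger.issue("coin-1", "alice")
ledger.transfer("coin-1", "alice", "bob")
try:
    ledger.transfer("coin-1", "alice", "carol")  # alice tries to spend the same coin again
except ValueError as err:
    print("rejected:", err)  # rejected: alice does not own coin-1
print(ledger.verify())       # True
```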

Conclusion

Tokenization is a reliable way to keep data secure, and its full potential has yet to be realized. With the growing use of blockchain technology, and more people shifting to mobile payments, we can expect tokenization to become even more accessible.

Fawzan Hussain

An SEO consultant and the CEO of Seooptimizekeywords.com. With over a decade of experience in the industry, I'm passionate about helping businesses achieve their online marketing goals through effective SEO strategies.


WorksHub
